As Google continues to improve its search algorithm, SEO practices must rapidly and consistently evolve to stay competitive. But if there’s one aspect of SEO that has seen the greatest change over the shortest period of time, it’s technical SEO.

Technical SEO is a bit of a rabbit hole, ranging from implementing proactive measures that anticipate the foreseeable to frantically creating reactive strategies that fix the broken. Whether you are preparing for a new site launch or auditing a site’s current health, having a technical SEO checklist in place can help keep you organized and on track.

At Captivate, we aren’t strangers to the technical challenges accompanying SEO. In fact, we are always involved in a number of technical clean-up projects to help reverse traffic drops across multiple sites. The advantages of technical SEO inspired us to share our ten-step technical SEO checklist.

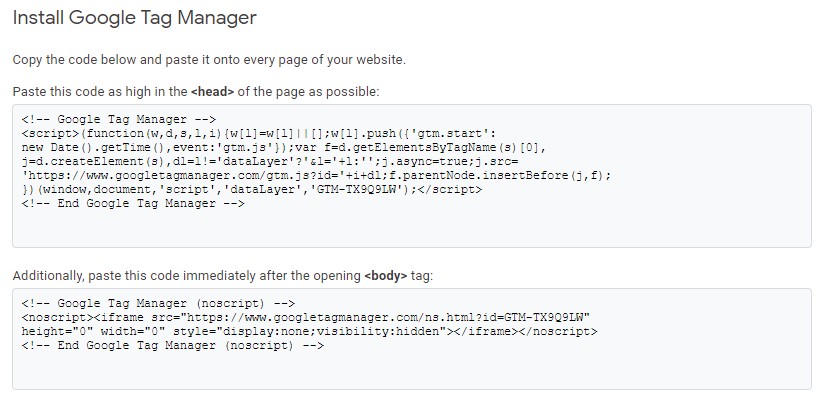

1. Validate Google Search Console, Analytics, & Tag Manager

While this first step might be obvious to some, it’s essential to underscore the importance of having foundational analytics and tracking tools in place. Just think about the overused jokes like “is it plugged in?” and “try turning it off and on again.” The foundational and seemingly easy items are sometimes overlooked, causing unnecessary headaches later on.

Having tools like Google Analytics, Google Search Console (formerly Webmaster Tools), and Google Tag Manager properly set up and configured is critical to pinpointing issues as they arise. These tools are not “one-and-done” implementations, either. We recommend reviewing the alerts and messages generated by these tools regularly to stay ahead of possible problems.

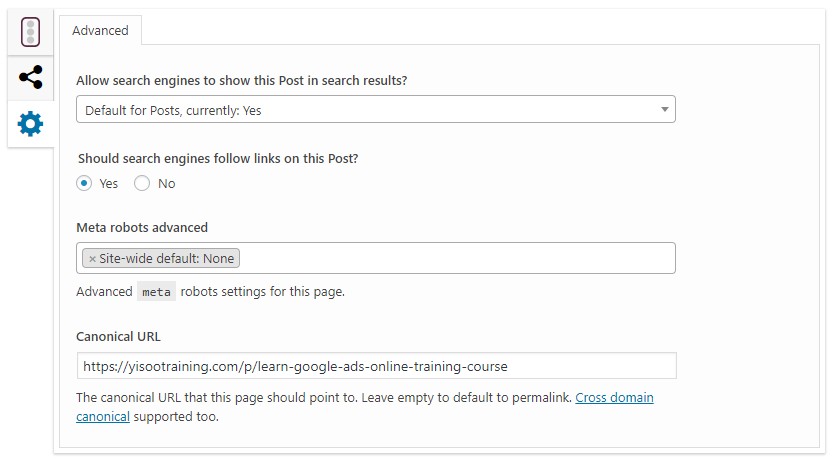

2. Canonicalize All Pages

If there’s one issue we have experienced time and time again, it’s finding multiple versions of the same page. In fact, we’ve encountered sites with five or more different URL versions of the same page stepping on each other. When we see this problem, it’s usually due to a faulty server setup or a shaky content management system. For example, one project had the following URLs generated for its ‘About Us’ page:

- https://site.com/about-us/

- https://www.site.com/about-us/

- https://www.site.com/about-us

- https://site.com/about-us

- http://www.site.com/about-us

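To a search engine, the above looks like five separate pages, all with the exact same content. This causes confusion or, even worse, makes the site appear spammy or shallow with so much duplicate content. The fix for this is canonicalization: declaring one preferred URL for each page, typically with a rel="canonical" link element or with 301 redirects from the other versions.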
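As a rough illustration, the five variants listed above can be collapsed to one preferred URL with a few lines of Python. This is only a sketch under our own assumptions: we picked the HTTPS, “www”, trailing-slash version as the preferred form, and the names `canonical_url` and `canonical_link_tag` are hypothetical helpers, not part of any SEO tool.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit

# Assumptions for this sketch: the preferred version of every page is
# HTTPS, on the "www" host, with a trailing slash.
PREFERRED_SCHEME = "https"
PREFERRED_HOST = "www.site.com"
KNOWN_HOSTS = {"site.com", "www.site.com"}


def canonical_url(url: str) -> str:
    """Map any known variant of a page URL to the preferred version."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host in KNOWN_HOSTS:
        host = PREFERRED_HOST
    # Simplification: every path is treated as a directory-style URL,
    # so file-like paths (e.g. /file.pdf) would need special handling.
    path = parts.path or "/"
    if not path.endswith("/"):
        path += "/"
    # The fragment is dropped; the query string is kept as-is.
    return urlunsplit((PREFERRED_SCHEME, host, path, parts.query, ""))


def canonical_link_tag(url: str) -> str:
    """Build the <link> element to place in the page's <head>."""
    return f'<link rel="canonical" href="{escape(canonical_url(url))}" />'
```

Every one of the ‘About Us’ variants above maps to `https://www.site.com/about-us/` with this function. The same mapping could drive server-side 301 redirects instead of (or in addition to) the link tag.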

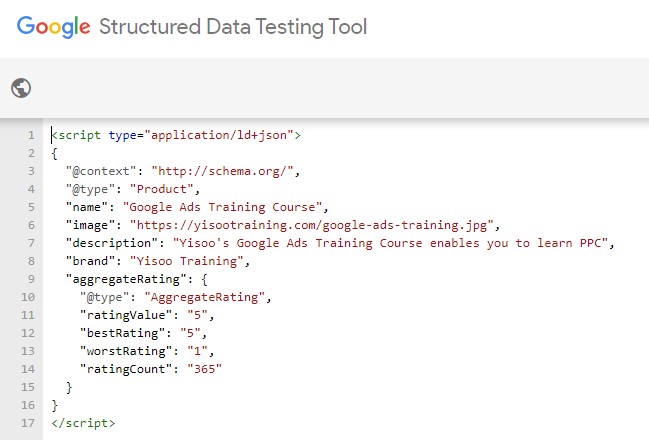

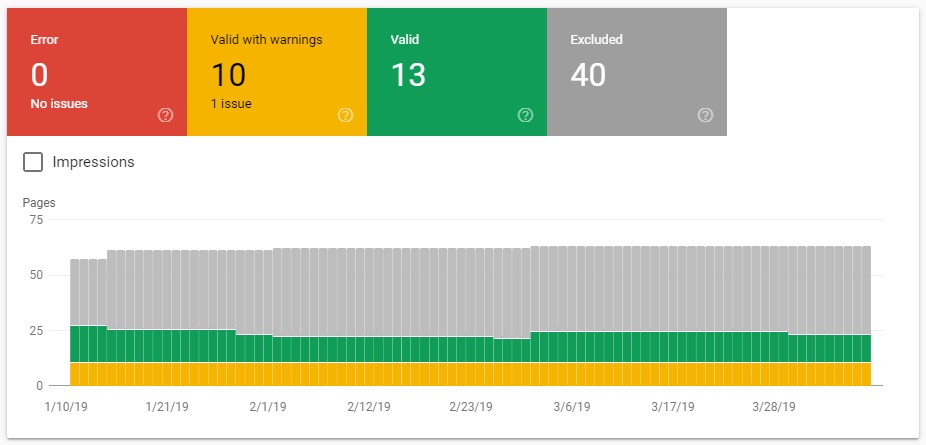

3. Implement Proper Structured Data Markup

Implementing Structured Data markup has become the new gold standard for technical SEO practices. In essence, Schema (the vocabulary maintained at Schema.org) is a shared form of markup that was developed to help webmasters better communicate a site’s content to search engines.

From a company’s address and location information to reviews and aggregate ratings, Structured Data lets you precisely define pieces of content so Google and other search engines can properly interpret what the content means. In turn, your site can benefit in the search results with rich snippets, expanded meta descriptions, and other eye-catching elements offering a competitive edge. Google Search Console also logs any errors it sees with Structured Data and provides a validation tool to help you when adding the markup to your site’s content.

For more information about the different types of Schemas and properties available for Structured Data, visit Schema.org.
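To make this concrete, here is a minimal sketch of generating JSON-LD markup for a business address and aggregate rating. The types and property names (`LocalBusiness`, `PostalAddress`, `AggregateRating`) come from Schema.org; the function name `local_business_jsonld` and the sample values in the usage note are our own placeholders.

```python
import json


def local_business_jsonld(name, street, city, region, postal_code,
                          rating, review_count):
    """Return a <script> block with Schema.org JSON-LD for a business."""
    data = {
        "@context": "https://schema.org",
        "@type": "LocalBusiness",
        "name": name,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,
            "addressRegion": region,
            "postalCode": postal_code,
        },
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
    }
    # Escape "<" so a value can never close the <script> element early.
    body = json.dumps(data, indent=2).replace("<", "\\u003c")
    return f'<script type="application/ld+json">\n{body}\n</script>'
```

Calling `local_business_jsonld("Example Cafe", "1 Main St", "Springfield", "IL", "62701", 4.5, 27)` returns a block that can be pasted into a page template and then checked with Google’s validation tooling.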

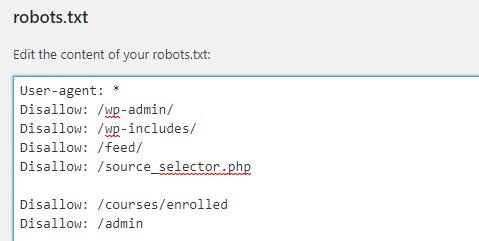

4. Review Robots.txt File

The robots.txt file, which implements the “robots exclusion protocol,” is another communication tool for search engine crawlers. This file, which is placed in the root of a given site, presents an opportunity for webmasters to specify which areas of the website should not be crawled. Here, certain URLs can be disallowed, asking search engines not to crawl them.

Note: Not all search engine crawlers are created equal. Well-behaved crawlers honor these rules, but others may ignore them. Keep in mind, too, that disallowing a URL stops it from being crawled, not necessarily from being indexed: a blocked URL can still appear in search results if other pages link to it. To keep a page out of the index, use a noindex robots meta tag (or X-Robots-Tag header) on a page crawlers are allowed to fetch, since Google does not support ‘noindex’ rules inside robots.txt.

The robots.txt file should be updated over time as the directories and content you want crawled change. Bear in mind that it is a publicly readable set of requests, not an access control, so pages that must stay private need authentication rather than a Disallow rule. Regardless of your intentions, it’s always wise to evaluate a site’s robots.txt file to ensure it aligns with your SEO objectives and to prevent any future conflicts from arising.

You should also review the robots.txt file for a reference to the site’s XML sitemap. If your sitemap structure gets updated, it’s imperative you update the reference in the robots.txt file, too.
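A quick way to review a robots.txt file is to test specific URLs against it. The sketch below uses Python’s standard `urllib.robotparser` module; the rules in `ROBOTS_TXT` and the `load_rules` helper are made-up examples for illustration, not a recommended configuration. Note that `site_maps()` requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /courses/
Disallow: /admin/

Sitemap: https://www.site.com/sitemap.xml
"""


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text without making a network request."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


rules = load_rules(ROBOTS_TXT)

# True: nothing disallows the About Us page.
about_allowed = rules.can_fetch("*", "https://www.site.com/about-us/")
# False: everything under /courses/ is disallowed for all crawlers.
course_allowed = rules.can_fetch("*", "https://www.site.com/courses/intro")
# The sitemap reference(s) declared in the file.
sitemaps = rules.site_maps()
```

Running a list of your most important URLs through `can_fetch` after every robots.txt change is a cheap safeguard against accidentally blocking pages you want crawled.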

5. Identify Crawl Errors, Broken Links, & Duplicate Meta Data

With the help of tools like Google Search Console, Raven Tools, Screaming Frog, Moz, or our personal favorite, SEMrush, you can efficiently identify technical SEO issues that may be hindering a site’s performance. These issues include crawl errors, like broken or dead links (404 pages), and duplicate content issues, including redundant Meta titles and descriptions.

As best summarized by Yoast, crawl errors occur when a search engine tries to reach a page on your website but fails. There are a number of different types of crawl errors that can plague a site’s performance. So, if you encounter crawl errors, it’s important to fix them quickly and correctly. We recommend checking for crawl errors and duplicate content issues as part of your site’s regular maintenance schedule.
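If you export a crawl from one of these tools, spotting redundant meta data can be as simple as grouping pages by title or description. Here is a minimal sketch, assuming the export has been loaded into a list of dictionaries with `url`, `title`, and `description` keys (that shape, and the name `find_duplicates`, are our assumptions rather than any tool’s actual format).

```python
from collections import defaultdict


def find_duplicates(pages, field):
    """Group page URLs that share the same value for a meta field."""
    groups = defaultdict(list)
    for page in pages:
        # Normalize case and whitespace so near-identical values match.
        value = page.get(field, "").strip().lower()
        if value:
            groups[value].append(page["url"])
    # Keep only values used by more than one page.
    return {value: urls for value, urls in groups.items() if len(urls) > 1}
```

Calling `find_duplicates(pages, "title")` and `find_duplicates(pages, "description")` on each crawl gives you a short list of pages that need unique meta data.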

6. Implement Appropriate Hreflang Tags

International SEO introduces unique challenges for a site’s technical integrity. The most common of these is incorrectly adding hreflang declarations on sites offering multiple language options. The hreflang tag helps Google and other search engines list the most appropriate content according to a user’s location and browser language settings.

Imagine if your American users continually landed on a page written in German. Those users are likely to immediately return to the search results, causing the site’s visibility to suffer overall.

Here are the top things we look for when reviewing hreflang tags:

- A consistent implementation method: This can be done either in the `<head>` of each page or in the XML sitemap. The important piece here is choosing one method and sticking to it.

- Each page should include tags for ALL of the regionalized versions that exist for that page, including itself.

- A default (hreflang="x-default") should be declared as a fallback.

- Hreflang tags should not be used as replacements for canonicalization.
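The checklist above can be sketched as a small generator that emits the same full set of tags for every regional version of a page. The function name `hreflang_tags` and the example URLs are hypothetical; the `rel="alternate"`, `hreflang`, and `x-default` attributes are the standard ones.

```python
from html import escape


def hreflang_tags(versions, default_url):
    """Build hreflang <link> tags for the <head> of every version.

    versions maps a language-region code (e.g. "en-us") to its URL.
    The same full list, including the page's own entry, goes on each
    regional version of the page.
    """
    tags = [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}" />'
        for code, url in sorted(versions.items())
    ]
    # Fallback for users whose language or region matches no version.
    tags.append(
        f'<link rel="alternate" hreflang="x-default" href="{escape(default_url)}" />'
    )
    return tags
```

For example, `hreflang_tags({"en-us": "https://www.site.com/en-us/", "de-de": "https://www.site.com/de-de/"}, "https://www.site.com/")` returns three tags: one per regional version plus the default. These tags sit alongside the canonical tag, not in place of it.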

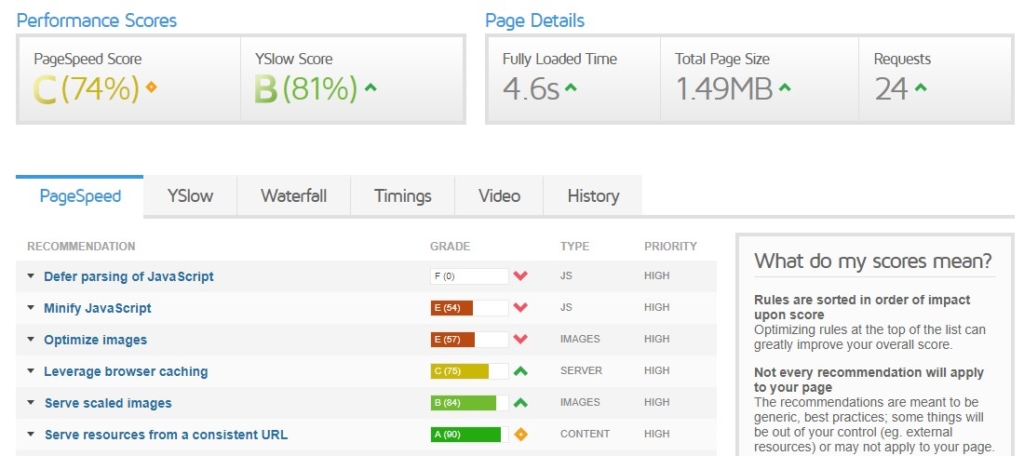

7. Evaluate Site Performance Metrics

One of the most useful tools for improving your website’s technical SEO is GTmetrix. Essentially, this tool provides key insights about site speed performance, including how to minify HTML, CSS, and JavaScript, as well as how to optimize caching, images, and redirects.

Site speed has become a significant Google ranking factor over the years as part of Google’s mission to serve users with the best possible experience. As such, it rewards fast-loading websites that offer a quality user experience (measured by bounce rate, average pages visited, time on site, etc.).

Conversely, clunky sites that take a while to render are unlikely to realize their full SEO or conversion potential. In addition to GTmetrix, there are a couple of other tools worth exploring to test and improve your site’s speed and performance. These are Google PageSpeed Insights and web.dev, both of which provide actionable guidance and in-depth analysis across a number of variables.

The recommendations given by these tools to improve page speed can vary from simple image compression to altering how the server interacts with requests. It’s a good idea to engage with a knowledgeable development team if you’re finding that the majority of recommendations read like gobbledygook to you.

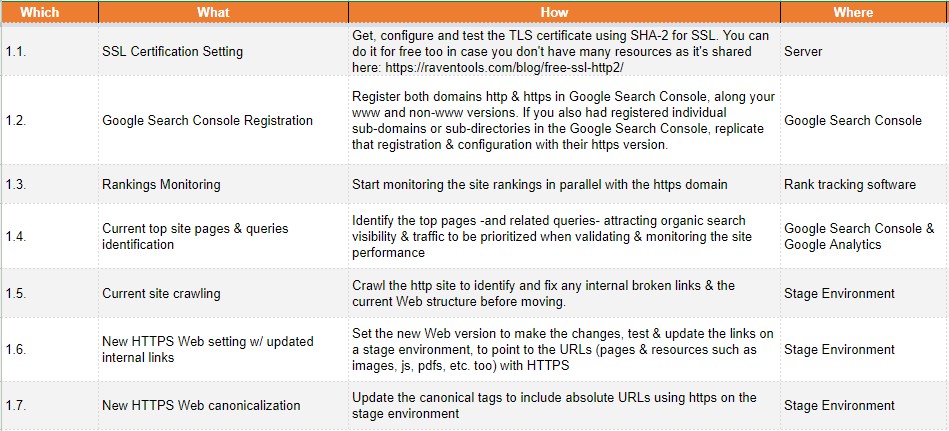

8. Check HTTPS Status Codes

A Ranking Factors Study conducted by SEMrush found that HTTPS is a very powerful ranking factor that can directly impact your site’s rankings, which is why switching to HTTPS is a must.

However, when migrating to HTTPS, you need to define proper redirects, as search engines and users will not be able to reach your content through old HTTP URLs that are left without them. In most cases, they will see 4xx and 5xx HTTP status codes instead of your content.

Aleyda Solis put together a thoroughly detailed checklist on how to make the HTTPS transition more seamless. But after consulting her list, you need to invest the time to assess any additional status code errors.

Using tools like Screaming Frog to generate a site crawl report can provide you with all URL errors, including 404 errors. You can also use Google Search Console to get this list, which includes a detailed breakdown of potential errors. Lastly, check in with Google Search Console from time to time to ensure your site’s error list is always empty, and fix any errors as soon as they arise.
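Once you have a crawl report, a few lines of code can sort the results into the buckets that matter after an HTTPS migration. This sketch assumes the report has been reduced to a mapping of URL to HTTP status code; the function names and that data shape are our own illustration, not the export format of any particular crawler.

```python
from collections import defaultdict

# Status codes that signal a permanent redirect.
PERMANENT_REDIRECTS = {301, 308}


def status_class(code: int) -> str:
    """Bucket an HTTP status code by its leading digit."""
    if 200 <= code < 300:
        return "ok"
    if 300 <= code < 400:
        return "redirect"
    if 400 <= code < 500:
        return "client_error"
    if 500 <= code < 600:
        return "server_error"
    return "other"


def summarize_crawl(results):
    """Group crawled URLs by status class."""
    summary = defaultdict(list)
    for url, code in sorted(results.items()):
        summary[status_class(code)].append(url)
    return dict(summary)


def http_urls_without_permanent_redirect(results):
    """List old HTTP URLs that do not answer with a 301 or 308."""
    return [
        url
        for url, code in sorted(results.items())
        if url.startswith("http://") and code not in PERMANENT_REDIRECTS
    ]
```

Anything returned by `http_urls_without_permanent_redirect` is an old HTTP URL that still needs a redirect to its HTTPS counterpart, and the `client_error` and `server_error` buckets are the 4xx and 5xx pages to fix first.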

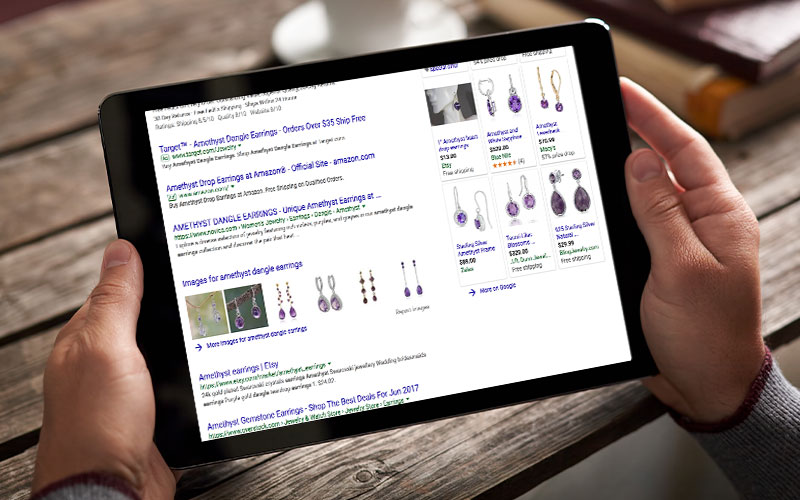

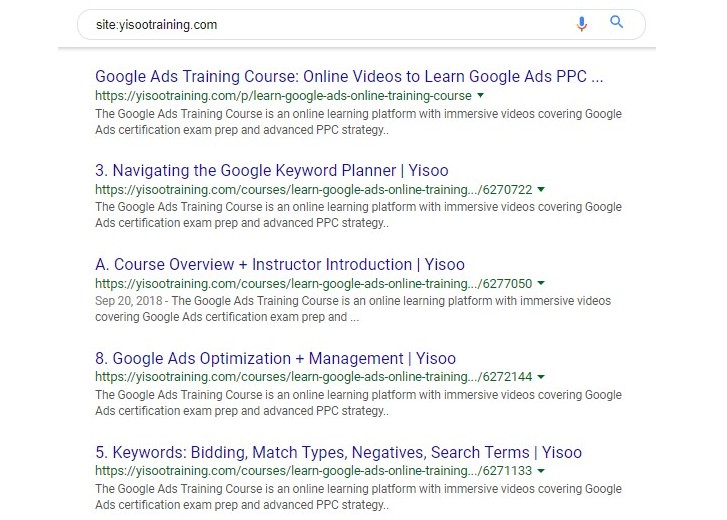

9. Google Search “Site:YourSite.xyz”

One of the simplest technical SEO tips at everyone’s disposal is performing a “site:” search of your domain right in Google’s search box. This is an easy way to see how Google is crawling and indexing your site.

A few considerations when conducting a “site:” Google search:

- If your site is not at the top of the results, or non-existent altogether, then you either have a Google manual action or you’re accidentally blocking your site from being indexed, probably via robots.txt.

- If you see redundant Meta data and multiple URLs being indexed, then you likely have a duplicate content issue and need to prevent unwanted URLs from getting indexed.

- If your meta titles and descriptions are being cut off (…), consider modifying them to be more concise.

In the above figure you can see Google is crawling various unique extensions of the same URL (/courses/), with the same Meta description being duplicated for each. In this case, we’ll likely disallow all “/courses/” URLs as they don’t offer much value and dilute the site’s SEO.
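For the truncation check in the list above, a rough length test can flag titles and descriptions worth shortening before you ever run the search. The limits below (60 and 155 characters) are common rules of thumb that we are assuming for this sketch; Google actually truncates by display width, so treat the output as a hint rather than a rule. The name `truncation_risks` is hypothetical.

```python
# Assumed rule-of-thumb limits; not official Google values.
TITLE_LIMIT = 60
DESCRIPTION_LIMIT = 155


def truncation_risks(pages):
    """Return (url, field, length) for meta values over the limits."""
    limits = {"title": TITLE_LIMIT, "description": DESCRIPTION_LIMIT}
    risks = []
    for page in pages:
        for field, limit in limits.items():
            length = len(page.get(field, ""))
            if length > limit:
                risks.append((page["url"], field, length))
    return risks
```

Each page is expected to be a dictionary with `url`, `title`, and `description` keys, the same assumed shape you might build from a crawler export.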

10. Ensure Responsiveness (Mobile-friendly)

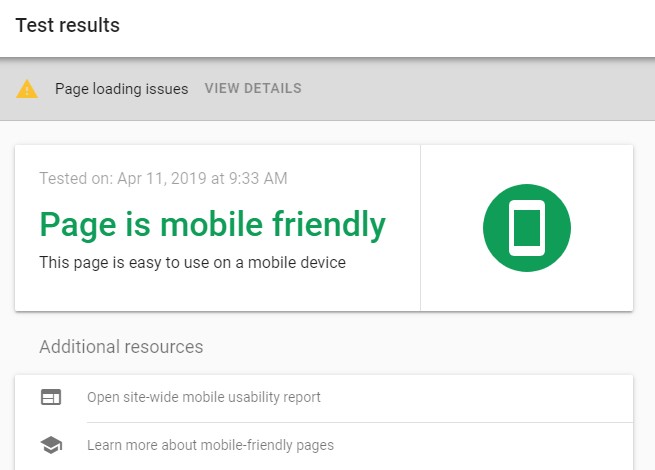

In short, sites that are not mobile-friendly will suffer in the search results. By using tools like Google’s Mobile-friendly Test, you can see just how responsive your site is on mobile devices. Such tools can also reveal more detailed technical insights that are valuable and actionable in optimizing your site’s performance.

With mobile now the dominant platform for web browsing, having a responsive site is absolutely vital – not only for SEO but also for users. If your website is still not mobile-friendly, now is the time to prioritize a responsive redesign.
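One basic prerequisite for a responsive page is a viewport meta tag that sets `width=device-width`. The sketch below checks for it using Python’s standard `html.parser` module. It is only a first-pass smoke test under that single assumption and no substitute for a full mobile-friendliness test; the class and function names are our own.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Record the content of the page's viewport meta tag, if any."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        if tag == "meta":
            attributes = dict(attrs)
            if (attributes.get("name") or "").lower() == "viewport":
                self.viewport = attributes.get("content") or ""


def has_responsive_viewport(html_text: str) -> bool:
    """True if the page declares a device-width viewport."""
    finder = ViewportFinder()
    finder.feed(html_text)
    if finder.viewport is None:
        return False
    # Ignore spacing and case differences inside the content value.
    return "width=device-width" in finder.viewport.replace(" ", "").lower()
```

A page that fails this check is almost certainly not responsive; a page that passes still needs to be reviewed on real devices or with a dedicated testing tool.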

The above tips are certainly not an exhaustive list of all the technical items that need to be considered for your site, but we hope this gives you a head-start on the things to regularly check to keep your site performing at its best. What items would you add? Let us know @CaptivateSearch.